Letters from O’Brien #20

Source… https://escapingmasspsychosis.substack.com/p/social-media-manipulation

Several years ago, I began looking into the utility of astroturfing campaigns on social media, and the role these campaigns play in the manipulation of thought and opinion. Since then, I have begun to realise the extent of the psychological manipulation we are under, and just how much of it comes from the subversion of thought via social media.

There are a few key platforms which have shot up in popularity since I began looking into this phenomenon, but the most prolific among them is Reddit. Originally a message board with the ability to create niche communities, the site has since turned into one of the most visited locations on the internet. Why? Well, I guess there could be any number of psychological explanations, but what is evident is that the potential for manipulation in this space is very high.

THE ALLURE OF LEGITIMACY

The power of social media lies primarily in its ability to generate a sense of real engagement between interconnected individuals and communities. It has an allure similar to that of an event invitation – you’re included, wanted, special. People act as if they are interacting with those close to them, despite only a fraction of their online interactions being with actual real-life friends or family. The majority of interaction and engagement comes from vaguely detailed accounts, but this doesn’t seem to stop anyone from being swayed. Twitter and Reddit are great examples of this ‘disconnect’ from actual human beings and real relationships.

This is extremely important to understand, because once you understand it, you can begin to see how it can be manipulated. Most people are familiar with the popularised methods of public subversion – the mass nudging of the mind in modern propaganda. Media outlets publish stories pushing a narrative, people buy into it or call it out, and the cycle repeats. Cycles within cycles, layers upon layers: what may look superficial is often just the veneer of a deep and well-constructed campaign.

ASTROTURFING

Astroturfing – as it has come to be known – is a well-known method of ideological manipulation: manufacturing the appearance of grassroots support for an idea. While most people are at least partially aware of the term, few actually understand the concept behind it. As with classical forms of propaganda, astroturfing works on the assumption that the thoughts and opinions of certain individuals or groups can be swayed simply by presenting them with particular sets of information.

Imagine I work for an astroturfing operation and I want to change someone’s view on United States foreign policy. There is a recent development in the news: the United States has recognised Morocco as the rightful owner of a disputed region to its south, known as Western Sahara. The Polisario Front, an independence movement, claims the land for itself. (By the way, this is a real, ongoing conflict.)

Now this news gets posted on social media, say Twitter. Realistically, you will have some first-hand opinions from Moroccans and members of the Polisario Front. We could think of these as the most affected, and therefore most reliable, opinions. Outside of that small core sphere, you have second-tier opinions from geopolitical experts and foreign supporters. And lastly, you have internationals who simply share their moderate or poorly informed opinions. This last group is important, because even if the opinion isn’t that of an expert, it still has some sway.

Now let’s take a step back. For a foreign government, this is a perfect time to subtly change the public’s opinion on American foreign policy, and to do that we will swing their opinion towards sympathising with the Polisario. The idea is to pull the weight of public opinion in a particular direction.

There are several ways of doing this. The first, and most obvious, is to simply delete opinions which disagree with the narrative you want to push. Of course, this would require you to have access to a social media database, or at least to communicate with the platform’s moderators, but this is entirely possible for organisations or governments with the right amount of money on offer. This has been done many times before, and continues to be done, but there is one major disadvantage to it. If I were to simply start squashing dissenting voices in order to change what the public will find when they read through a Twitter post and its responses, it may change their opinion in the short term. But the people whose opinions you really want to change – the ones we know will take action – are likely to do their own research. Those whose voices have been silenced on Twitter will take to a different platform and spread the same opinion there, and to make matters worse for whoever is trying to change the narrative, the silenced voices will call out Twitter for silencing dissent. If this happens enough times with enough people, everyone will see through the façade. The legitimacy of Twitter’s moderation will take a hit, not to mention that those silenced voices will be taken more seriously – people will instinctively think that the deleted opinions on US foreign policy must be the truth, or the correct view, hence why they were silenced in the first place.

This is why deleting comments, banning users and taking down posts will eventually lead to the failure of an astroturfing campaign. We’ve seen this happen many times over the years with blatant social media manipulation in the form of suppression. It has only resulted in the ‘controversial’ opinions being sidelined, gaining followers, and then returning in force.

Rather, the astroturfing campaigner must take a different approach, one made easy with access to the front end of a site like Twitter. This approach is to inject new opinions into the online sphere. These opinions will require the ‘astroturfers’ to create fake profiles, fake personalities and, most importantly, a sense of real engagement from other users. If a post announcing US support for Morocco goes up, and 100 out of the 150 responses are against the actions of the US, then we are on the right track. Let’s say that of the 100 responses disagreeing, 50 are from fake astroturfed accounts. People reading these negative responses will subconsciously lean towards the critical view, simply out of the belief that it must be the correct opinion. But to make things even more convincing, we have to make sure that the anti-US responses are boosted to the top. They have a few retweets, a few likes, and maybe even a reply or two agreeing with the opinion. Again, this is a fine art: if it looks fake, people will notice, and if it’s not received well or is pushed to the bottom, people won’t take it seriously, or simply won’t see it at all.

For the average Twitter user, who is likely both uninformed on US-Moroccan relations and not interested enough to do their own research, opinions will be shaped almost entirely by what they see the crowd doing online. We have seen this recently with the Ukraine-Russia conflict. Rather than recognising the geopolitical and historical context of the conflict, people summarise the entirety of the war as ‘Ukraine good, Russia bad, the end of the matter’.

But let’s go one step further. As mentioned earlier, there are several layers of legitimacy beyond the number of likes and retweets, and one of them is the perceived legitimacy of whoever is posting or commenting. The most convincing is hearing opinions from first-hand witnesses: the Moroccans and the Polisario. Whether they are for or against the opinion is irrelevant. Then we have geopolitical experts, avid supporters, or anyone else who appears to have researched the topic. These again hold a strong subconscious sway over public opinion. This may even include those who claim to be from neighbouring regions, such as Spain or Mauritania. All of these need to be carefully curated, encouraged in the right direction, or simply fabricated (if found out, you can pass the blame to a fabricated third party or an enemy; it matters not after the campaign has succeeded).

It becomes obvious that manipulating a user base’s opinions comes down to the subtle art of presenting legitimacy, not simply silencing dissenting voices. If done properly, such a manipulation campaign can go practically unnoticed. And the level of detail one can take this to is pretty much endless.

REDDIT IS FAKE

Now I want to turn to Reddit, one of the most blatantly manipulated websites on the internet. Reddit stands as one of the best examples of large-scale groupthink and hyper-manipulation of opinion. You can think of it as an enormous, yet poorly orchestrated, astroturfing campaign, and as such there is a bit to learn from it. To begin with, Reddit has a ‘social credit score’ system known as ‘karma’. Users can like or dislike comments and posts, which determines a score. This score then determines how well the post or comment performs in the ranking algorithm. But more importantly, it gives the illusion that the underlying opinion is accepted by others. It acts as a type of social validation, which in turn influences whoever is reading it, similar to seeing other people’s opinions on Twitter, Instagram, etc.

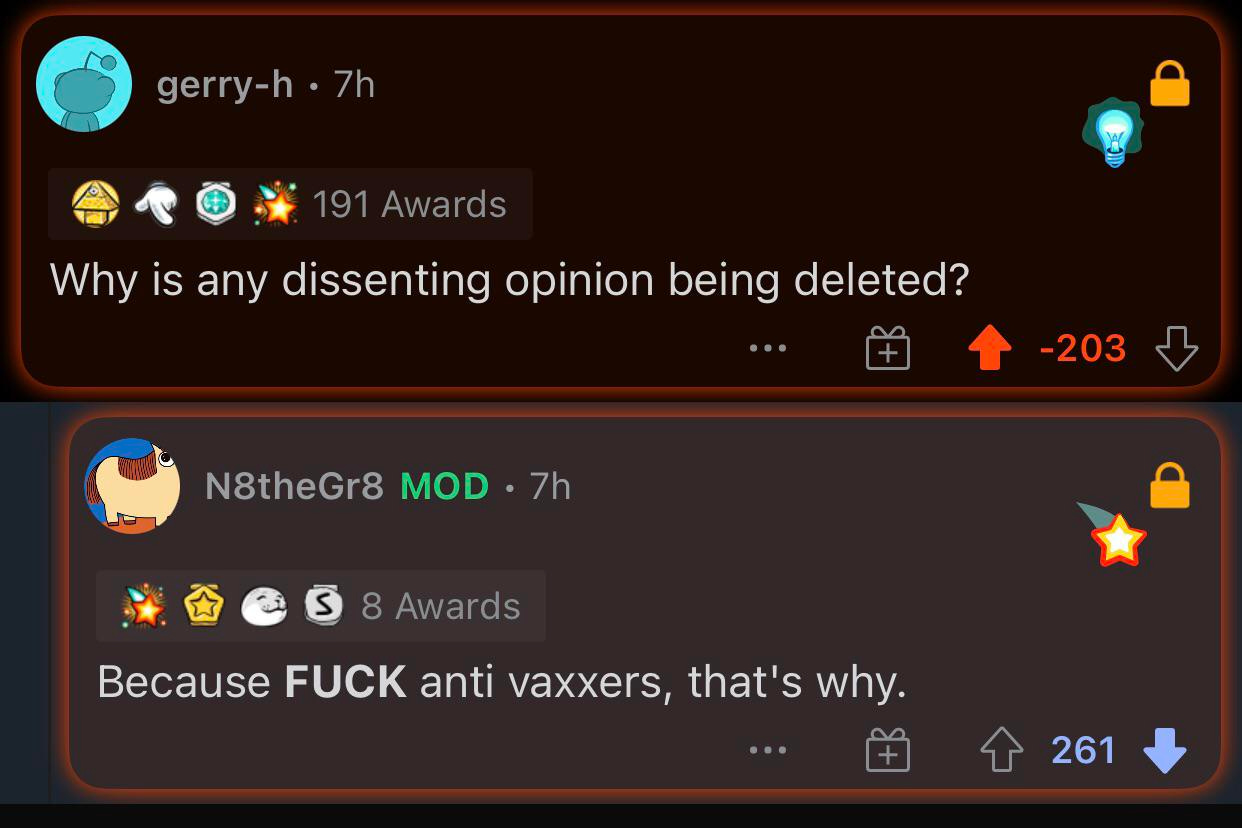

Seeing through this veneer of ‘legitimacy’ is difficult, but still possible. For example, many conflicting opinions on Reddit are manipulated through this karma mechanic. Take these two comments as an example, in which two people are having a disagreement. At first glance, the first comment has been heavily disliked, showing that people disagree with it, and the response has many likes, ‘proving’ that people agree with it. Now let’s take a closer look…

On Reddit you can give people awards for their comments. I’m not entirely sure what the utility of receiving awards is, but it is common for people to give you an award if they agree with you. It’s like the karma system, but stronger. You can think of it as a sticker you pin on someone’s post or comment to show that you like it, or agree with it enough to expend extra effort showing it. The first comment, despite being heavily disliked, has received almost 200 awards, which is huge in comparison to the votes on both comments. This is the first sign that there is manipulation. The response, which appears to be well received, has only 8 awards. Thus, we have discovered what appears to be a form of astroturfing. This could have been achieved using bots, or simply through backdoor access to the site that allows the displayed numbers to be changed. Which one is the manipulated comment and which is the legitimate one? It is hard to say, but given the content of the comments, I would say the response (championing the ‘current thing’) is the correct one.

Now this is a clear flaw in the system. Had this been part of a properly executed astroturfing campaign, this mistake wouldn’t have been made, and it would have been near impossible to notice. Bots or parameter manipulation could be used to present the initial comment as universally disliked, and likewise to validate the response as something closer to the publicly accepted opinion.

CONSPIRACY?

This goes deeper: there is an emerging theory that these ideas are put into practice using AskReddit, one of the largest communities on the platform. The idea is smart and simple. The community claims to be oriented around ‘asking others on the platform’ a particular question. This adds an immediate layer of legitimacy, since the presupposition is that these are actual people asking questions formulated in their own heads, which are then answered by other random people on the site.

Some of the opinions, questions, answers, and viewpoints cannot be taken seriously, as they are worded in a particularly biased fashion, or they ask a question which alludes to a particular answer and thus primes people to offer it. And too often the questions which rise to the top of this community have one or more of these attributes. Many have noticed that it seems unlikely that even half of these would legitimately attract interest if left to the unbiased opinions of the public.

It doesn’t take much thought to realise how effective this could be at psychologically manipulating whoever actively reads the questions and answers pushed to the top and given an air of popularity. Take a pre-generated question, immediately voted to the top of the community using bots or simply back-end manipulation, followed by pre-generated answers pushed to the top of the comment section, and we have a completely fabricated popular opinion.

SELLING ACCOUNTS

I also want to point out the buying and selling of social media accounts. There is an ever-growing market for accounts on Twitter, Reddit, and so forth. The reason is simple: you can create and grow an account that has obvious social value. For example, going back to a previous point, you could create an account on Twitter focusing on geopolitics. You can garner a following by posting actual data, researched opinions and so forth. Then you can put it on the market. Some astroturfing ‘firm’ purchases it, and now they can use it to slowly and subtly change opinions.

I imagine the most effective trick is to diversify the outlets of information through a broad spread of account types. Political accounts are very specific, and in my opinion, people take their political opinions less seriously than if they saw a non-political figure making a political statement (since that implies something is so pressing or important that even those outside of the political sphere hold an opinion on it). Again, I believe the trick is subtlety. Think of accounts associated with economics, cooking, tech, or architecture. The standard content continues on, but with slight ideological undertones which are then implanted in the minds of the account’s followers: the tech commentator making a political remark, the cooking guru aligning in subtle ways with a certain ideology.

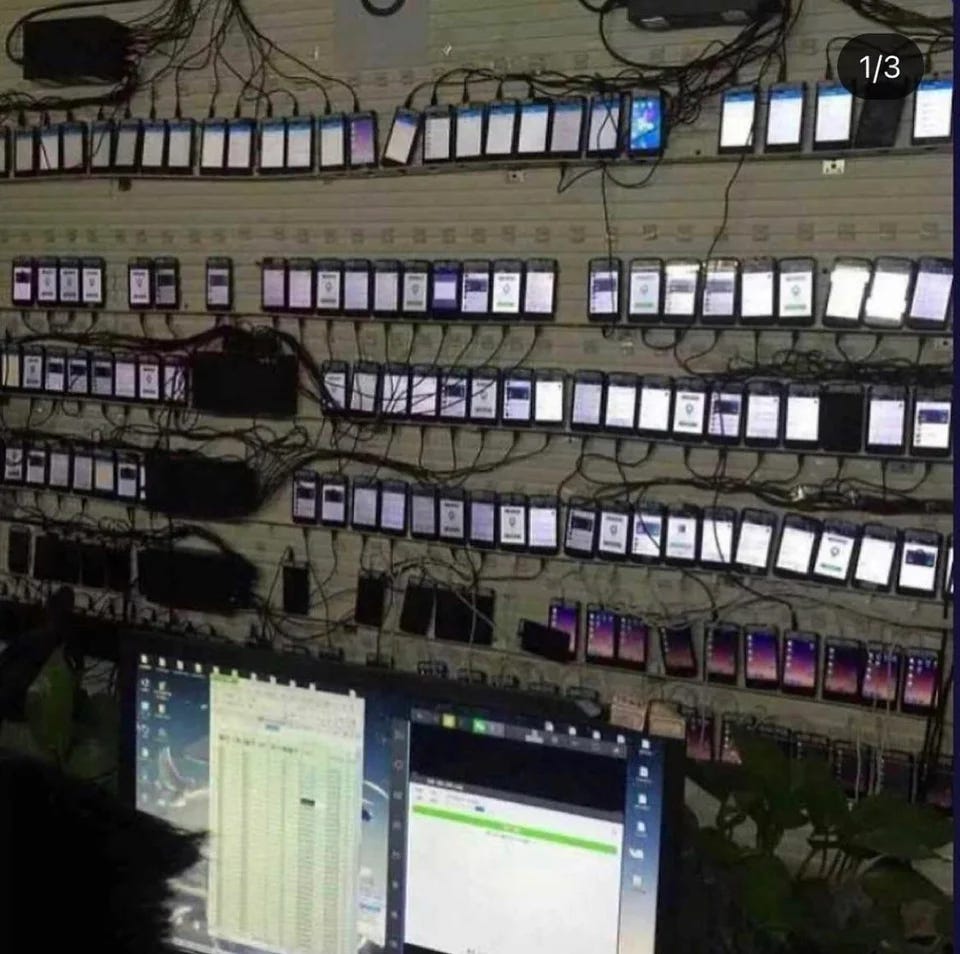

The possibilities with this technology are endless. This is why the CCP has invested so much time, money, and manpower into massive online armies running such fake accounts. From what I have seen, they appear to be tasked with taking part in online discourse as any normal person would, but with ideological undertones. These may not even align with CCP doctrine. Accounts could be set up to mimic left- or right-leaning Americans, and then used to trigger online arguments. The trick – in China’s case – would be to do whatever best demoralises their enemy, not purely spread Marxist doctrine. A divided house will fall. This same approach can be replicated by anyone with enough time, money, and patience to swing opinions.

LAYERS OF LEGITIMACY

For those of us without access to Twitter’s algorithm, this is the best way to go about expanding an astroturfing campaign: continue to add layers of legitimacy. Each layer makes it increasingly difficult for those researching these accounts to find fault with them. You can think of this as a type of safeguard which can be built up over time to slow the discovery of manipulation.

For example, the first layer of legitimacy would be creating a post which looks like it was written by a real person. The second layer is posting said comment from an account which, at first glance, looks legitimate. The third is having a profile with a large audience, while keeping the appearance of legitimacy.

I can keep expanding this in many directions. For example, a fourth layer of legitimacy would be interlinked accounts: an Instagram account, YouTube, Facebook, and so on. One could even put a face to the account. Anyone who clicks through to see where an opinion came from would be greeted by what seems like a real person. Of course, this is increasingly easy to do, as algorithmically generated faces can be used to build entire profiles of supposed ‘content’, which give the impression of an actual human being behind them. All of this is simply another way to sway popular opinion one way or another.

This is all a social media extrapolation of a broader phenomenon, best known from Solomon Asch’s conformity experiments in the 1950s. A group of people were asked a series of simple questions, each with one right answer and the others wrong (sometimes very obviously wrong), such as which of several lines matched a reference line in length. All but one of the participants had been told beforehand to give the same wrong answer, and they answered first. The one participant who was unaware of this often went along with the group and re-validated the wrong answer that the others had picked.

Many similar tests have been undertaken, and the point is this: many people will simply align themselves with the majority, be it in answering a question or responding to a particular scenario, even if they know that the majority are incorrect. Why? Who knows – ask Winston, he’s the psych. There are likely many theories as to why this is often the case, but the point is that it happens. The past two years have shown a willingness by certain types of people to do quite literally anything to prove support for the apparent societal trend, even if they know it is wrong.

WHY REDDIT IS TERRIBLE

Now let me turn my attention back to Reddit. I have watched the platform grow for about a decade, and over that same period I began moving away from it as a source of information and help. What once may have been a legitimate platform is now the most blatantly manipulated social media website (with the possible exception of TikTok). I have already touched on just how easy it is to manipulate user opinions through algorithmic changes.

One big issue – which came to my attention several years ago – is that the site is essentially run by ideologically radical individuals. Communities are managed by ‘unpaid’ moderators, whose task it is to enforce guidelines. Since the site is compartmentalised, each moderator is essentially given complete power over whichever community they are assigned to. In some cases, moderators run dozens or even hundreds of these communities. Many among them are blatantly power-mad, and exercise their power in the most obviously ridiculous ways. I first noticed this after several neutral tech discussions were censored and then shut down for blatantly ideological reasons. Things such as this led to a huge decrease in respect for the platform, a feeling that appeared to be shared by many other people. No longer was it a space for discussion, but something akin to a pit of reprobates. A phrase emerged which perfectly encompassed this feeling: “nobody tells their coworkers that they use Reddit”.

This abuse of power has continued since the 2016 election. Users are flagged by algorithms, their comments and posts are deleted, and then they are banned. People who point this out or highlight moderator issues are also silenced or banned. As a result, everything on the site becomes biased (and this is part of the reason I no longer found it useful). Neutral discussion, regardless of its context or nature, is wrangled in under the ideological theory espoused by the site.

On top of this, unlike Instagram (which, in my humble opinion, may be the most mentally damaging platform for young people), Reddit lacks ‘personality’, which makes it one of the strangest forms of social media. As such, it makes sense that it would be a hot spot for manipulation campaigns. Since there is little ‘human element’ to the website, I find it both alluring and repulsive, in the sense that users either believe that they are talking to other human beings, or realise that they are most likely talking to an algorithm, in which case any utility (tech advice, for example) is lost.

One final point on why I feel that Reddit stands alone in its ability to sway opinion: Reddit, of all social media sites, does the best job of creating an ideological echo chamber. (Yes, I realise all gatherings on social media can do this, but Reddit is on steroids in this regard.) This is particularly the case with location-relevant communities, such as those oriented around Europe, Australia, Japan, and so forth. As real people will note, these ‘communities’, which are made to paint a picture of the location in question, post disproportionately large amounts of woke and politicised content, much of which isn’t even location-relevant, and much of which comes from accounts based outside of said region. This gives them away as heavily inorganic and fabricated.

NO TRUST

So why do I stand in stronger opposition to Reddit than to any other platform? I blame Reddit, more than any other platform, for the promotion and propagation of woke culture beginning around 2013. I was using the platform at the time. When communities oriented around tech development, geopolitics, and history began promoting woke ideas, I knew something was not right. This was not free speech, nor even contextually relevant speech, but rather a platform’s willing attempt to push a particular narrative and censor opposition.

The demonisation of ideas such as individualism and free speech, promoted on the level of the administration of the platform itself, is undeniably wrong. This degree of manipulation could potentially spill onto all social media platforms within the next few years, and I can only hope that this will not be the case. But for now, the extremities of anti-individualism, hyper-conformism, and the systematic justification of ideological possession are limited to a few select platforms. Don’t fall into the ideological trap of Reddit, which can, and often does, convince its users that they are alone in their criticisms of the ‘current thing’.

One extremely evident example of this is the overwhelming and uncritical support for the United States’ current leadership. I don’t want to delve too deeply into mainstream American politics, since I’m not American and I’m no expert, but I think this is a good example of how the platform distorts public opinion. To begin with, Reddit (as with Twitter) appears to have come under extreme manipulation leading up to and immediately following the 2020 election. This was enforced through manipulated user feeds and heavy censorship. Just as with the 2016 election, this manipulation coincided with an increasing number of users blindly praising Democratic nominees while simultaneously demonising Republicans.

However, the past two years have been vastly different from the pre-election stage. The US population was largely divided in 2020, with many people openly supporting a Democratic candidate (often for the sake of getting Republicans out of office). This is no longer the case. In fact, the criticism of the current Democratic government is unlike anything I have seen, in my opinion surpassing the rage following the Republican win in 2016. This is reflected in left-wing forums, including openly Marxist groups, in which I have seen a unified distrust of the current government and its willingness to partake in identity politics (classic Marxists see this as counterproductive to class mobilisation, which is ironically correct). Despite this obvious shift in the political climate, if one were to visit Twitter or Reddit, it would seem as though ‘the current issue’ and the Democratic politicians in power are more popular than ever… by a long shot!

Perhaps I am wrong, but it seems to me that those critical of the US government’s absurd and ironically anti-democratic policies are met with generic responses on Twitter, Reddit, and even YouTube. My hypothesis, which I stumbled across in a Marxist forum of all places, is that GPT-3-level automation is being used in a mass astroturfing campaign to re-validate the idea of Democratic supremacy over competing parties. Why do I think this? I have seen, as have others who noticed this, that posts mentioning particular keywords, phrases, or general talking points critical of identity politics and destructive ideology are met with responses labelling them ‘Fascists’, ‘Trump supporters’, and so forth. These responses are all very similar in their phrasing and wording, and occur even when no political allegiance is mentioned, or even when the poster admits to being far Left. Unless a subset of real-life people (who I believe exist, but not in such large numbers) are being continually activated by particular keywords, I believe that algorithm-generated content makes up a large part of what one sees online, particularly on Reddit and Twitter.

On that topic, using the older GPT-2 model to generate content for social media is entirely possible. In fact, it was tested at a large scale on Reddit itself, which came to my attention while researching this very topic. Named the ‘subreddit simulator’ (r/SubSimulatorGPT2), this community on Reddit was self-contained to allow for the propagation of bot posts and comments. It worked by picking random communities on the website, scraping their data, and then allowing the algorithm to generate a post. From there, bot accounts would be allowed to comment at one-minute intervals. The results are practically indistinguishable from real people. In fact, when actual users are allowed to comment, it becomes impossible to distinguish human from algorithm. This was all done by non-professionals, using a small amount of sample data and running an older iteration of the GPT model. Now imagine if these sites wished to execute such manipulation professionally and on a large scale.

This plays into the so-called ‘dead internet theory’, which has been floating around for years. Its basic premise is that almost all interaction online is not real: it is either faked through algorithmic generation, or at least manipulated and distorted to censor or push particular elements of an online interaction. The theory holds that most of the falsity lies in comments, views, likes, shares, and other metrics which bear little depth. However, it also holds that online conversations between actual people can fall prey to the same manipulation, although this is far more complex. For example, someone may begin a conversation over text which is in fact being monitored and manipulated by an algorithm. This algorithm would be tapped into other data streams, allowing for seamless integration and subtle manipulation which does not stifle the communication of important details (which would expose the algorithm, especially if both parties have access to each other’s phones).

Again, I am unsure of just how deep these ideas cut on platforms such as Reddit and Twitter. For starters, it is presumed that bot accounts have to be limited both in their utility and in their numbers. The reasoning behind this is the presumption that once the artificial intelligence behind bot accounts advances beyond a certain point, it will begin generating content which contradicts what is expected of a human. This would include long and incomprehensible sentences, or equations only understood by other bots running an equally evolved algorithm, thus setting them apart from normal humans.

LOSING OURSELVES

I tie most of the manipulation and possibility for control back to society’s inability to contain itself in the online space. The younger generations are the first to grow up entirely immersed in a paradigm which we truly do not understand. I say this literally. Understanding something in a technical sense is not the same as understanding it in relation to oneself. The online space is like an abstraction of reality, or, as the postmodernists would label it, a simulacrum. It takes the veneer of reality and mirrors it, but it is not the same. Instagram, a personal dislike of mine, does exactly this: it mirrors reality based on rationalised attributes and builds an exaggerated hyper-reality out of it, which neither reflects actuality nor is distinguishable from it. It becomes an extrapolation and mockery of itself.

A good example of this is the act of texting. It is no longer just about contacting someone over a long distance; it is an act in itself. This is why it is addictive, and why people will text continually whilst in social settings with actual people around them. I believe things such as texting, posting photos of oneself online and monitoring the reaction, checking the updates from others, and so forth are not mere social interactions, but also a type of isolated projection of one’s own psychopathology. It is not merely a gateway, but a mirror which reflects an element of oneself back inwards without awareness.

I do not think that we understand our relationship to the online world. In the past, the happenings of life occurred almost exclusively within observable, real-time space. Rare exceptions were books, stories, and songs, through which we could be transported ‘beyond’ ourselves momentarily. However, people understood themselves in relation to these things: a story was understood within the context of the self. In contrast, we do not understand ourselves in relation to social media. For example, social media is not reality, nor is it even a snapshot or narrow view of reality, yet we subconsciously assume that it is. As I mentioned earlier, it is something akin to a simulacrum of the actual happenings in the world, upon which a new reality is built that cannot be distinguished from the old (actuality).

If we cannot understand ourselves in relation to social media platforms, then it should come as little surprise that the possibility of exploitation is huge. The gaps, flaws, and misunderstandings are everywhere, and anyone who spends enough time thinking over them will eventually figure out how to exploit them to their own advantage.

I believe Reddit and Twitter are the tip of the iceberg of a broader social problem, and I’m certain many of you would agree. These manipulations are obviously wrong, and our inability to understand ourselves in relation to the technology we possess only compounds the issue. We have been thrown into the deep end as a modernised society, unable to truly comprehend how our abstracted online landscapes (into which we unwillingly project a part of ourselves) will impact us in the future or help us navigate the present.

Unlike reading a book, looking at the news, or extracting meaning from a religious text, the social media landscape is more complex: the relationship between self and other is tangled, boundaries are uncertain, and abstraction is fundamental. As such, it is more open to the evils of propaganda.

I think people are becoming increasingly fractured, superimposing parts of themselves onto ever-evolving aspects of opaque technology, and finding it hard to rediscover themselves apart from such things.

Moving forward, people must wake up to the blatant manipulation made possible by this online obsession. They must disconnect themselves from their abstracted online realities and see things for what they truly are. And this comes not from an old man who is out of touch with technology, but from someone in his mid-20s who has been immersed in it.

This comes back to educating oneself, not merely in a philosophically obtuse way, but in how the world actually works. Possess logic and reason. No matter their complexity, philosophical and ideological arguments will crumble when wielded against someone who possesses the underrated knowledge of logic and empiricism, and who is able to strip away the language and see the flawed fundamentals, which often have no legs to stand on. Establishing oneself on the foundation of logic and reason will set a person apart, able to fight against any corrupted ideas which come their way.

Reddit is a dangerous place – hic sunt dracones.
